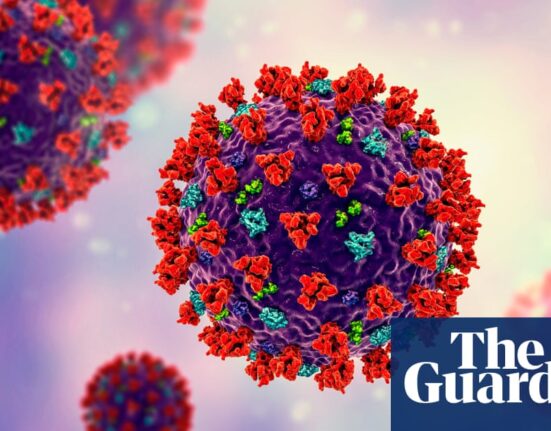

The big picture: If there’s one thing that generative AI is supposed to be good at, it’s analyzing the written word. However, two studies suggest that this ability may have been overhyped. One study demonstrates that GenAI struggles to understand book-length texts, while another shows that these models have trouble answering questions about videos. This is something companies should consider as they augment their workforce with GenAI.

Generative AI has struck fear in the hearts of creators of all types, but particularly in those who deal with the written word. Freelance work for copywriters has been drying up, largely due to the number of GenAI engines that have sprung up in recent months. Other forms of gig work have been affected too, despite the growing realization that AI isn’t entirely living up to its original hype.

Two new studies show some of the limitations of these chatbots and suggest those limitations may be more extensive than previously realized. Both studies examine how well GenAI can make sense of enormous amounts of data. Specifically, one tested the ability of AI language models to understand long stories, evaluating how well these models can comprehend extended narratives beyond typical short-range processing.

The other study evaluated the performance of vision language models (VLMs). Both studies found that AI falls short, and that includes Google’s latest Gemini generative AI models, whose main selling point is their ability to process and analyze large amounts of data.

For example, Gemini 1.5 Flash can analyze one hour of video, 11 hours of audio, or more than 700,000 words in one query, according to Google. In a presentation to journalists, Google showed how it could analyze a 14-minute video in one minute. But its grasp of the context – at least the long-form written context – is suspect, according to Marzena Karpinska, a postdoc at UMass Amherst and a co-author on one of the studies. “While models like Gemini 1.5 Pro can technically process long contexts, we have seen many cases indicating that the models don’t actually ‘understand’ the content.”

Karpinska, along with researchers from the Allen Institute for AI and Princeton, asked the models to evaluate true/false statements about recent fiction books, asking about specific details and plot points.

For one book around 260,000 words, or 520 pages, the researchers found that Gemini 1.5 Pro answered the true/false statements correctly 46.7% of the time while Gemini Flash answered correctly only 20% of the time.

GPT-4o achieved the highest accuracy at 55.8% on the NoCha (Novel Challenge) dataset. The study also found that the explanations the models generated for their decisions were often inaccurate, even for correctly labeled claims.
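To make the accuracy figures concrete, here is a minimal sketch of how answers to true/false claims could be scored. The names `claim_accuracy` and `always_true` are hypothetical and are not taken from the study, which may score claims differently.

```python
from typing import Callable, Sequence


def claim_accuracy(
    claims: Sequence[str],
    labels: Sequence[bool],
    judge: Callable[[str], bool],
) -> float:
    """Return the fraction of claims whose predicted label matches the gold label."""
    if len(claims) != len(labels):
        raise ValueError("claims and labels must have the same length")
    if not claims:
        raise ValueError("at least one claim is required")
    correct = sum(judge(claim) == label for claim, label in zip(claims, labels))
    return correct / len(claims)


def always_true(claim: str) -> bool:
    """Hypothetical stand-in for a model: answers 'true' to every claim."""
    return True


if __name__ == "__main__":
    # Placeholder claims; a real evaluation would pair each claim with the book text.
    claims = ["claim A", "claim B", "claim C", "claim D"]
    labels = [True, False, True, True]
    print(claim_accuracy(claims, labels, always_true))
```

In a real evaluation, `judge` would wrap a call to the model with the full book supplied as context.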

“We’ve noticed that the models have more difficulty verifying claims that require considering larger portions of the book, or even the entire book, compared to claims that can be solved by retrieving sentence-level evidence,” Karpinska said. “Qualitatively, we also observed that the models struggle with verifying claims about implicit information that is clear to a human reader but not explicitly stated in the text.”

In the second study, researchers found that across various tasks, including mathematical reasoning, visual question answering (VQA), and character recognition, a diverse set of VLMs struggle as the visual context length increases. In general, current state-of-the-art VLMs have difficulty ignoring irrelevant information when answering queries in long visual contexts.

The co-authors created a dataset of images, such as a photo of a birthday cake, paired with questions for the model to answer about the objects depicted in the images. They picked one of the images at random and inserted “distractor” images before and after it to create slideshow-like footage.
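The slideshow construction described above can be sketched roughly as follows. This is an illustrative assumption, not the researchers' code: the function name `build_slideshow`, the file names, and the choice to sample distractors without replacement are all hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple


def build_slideshow(
    target: str,
    distractors: Sequence[str],
    length: int,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], int]:
    """Hide `target` at a random position among `length - 1` distractor images.

    Returns the ordered frames and the index of the target frame.
    """
    rng = rng or random.Random()
    if length < 1:
        raise ValueError("length must be at least 1")
    if len(distractors) < length - 1:
        raise ValueError("not enough distractor images")
    # Sample distractors without replacement (an assumption of this sketch).
    frames = rng.sample(list(distractors), length - 1)
    # The target can land anywhere, so distractors may fall before it, after it, or both.
    position = rng.randrange(length)
    frames.insert(position, target)
    return frames, position


if __name__ == "__main__":
    pool = [f"distractor_{i}.png" for i in range(30)]
    frames, position = build_slideshow("birthday_cake.png", pool, 25, random.Random(0))
    print(len(frames), frames[position])
```

A model would then be shown all the frames and asked the question that belongs to the target image, so a longer slideshow means more irrelevant material to ignore.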

“On real question-answering tasks over images, it appears to be particularly hard for all the models we tested,” Michael Saxon, a PhD student at UC Santa Barbara and one of the study’s co-authors, said. “That small amount of reasoning – recognizing that a number is in a frame and reading it – might be what is breaking the model.”

Here too, Gemini Flash didn’t perform well. When asked to transcribe six handwritten digits from a slideshow of 25 images, it got around 50% of the transcriptions right, and accuracy dropped to about 30% with eight digits.