In brief: GPUs have memory limitations when facing the demands of AI and HPC applications. There are ways around this bottleneck, but the solutions can be expensive and cumbersome. Now, a startup headquartered in Daejeon, South Korea, has developed a new approach: using PCIe-attached memory to expand capacity. Developing this solution meant clearing several technical hurdles, and challenges remain, chief among them whether AMD, Intel, and Nvidia will support the technology.

The large datasets used in AI and HPC applications often exceed the memory built into a GPU. Expanding that memory has typically meant installing expensive high-bandwidth memory, which often requires changes to the existing GPU architecture or software.

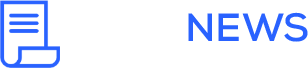

One solution to this bottleneck is being offered by Panmnesia, a company backed by South Korea’s KAIST research institute, which has introduced new tech that allows GPUs to access system memory directly through a Compute Express Link (CXL) interface. Essentially, it enables GPUs to use system memory as an extension of their own memory.

Called CXL GPU Image, this PCIe-attached memory has double-digit-nanosecond latency, significantly lower than that of traditional SSDs, the company says.

Panmnesia had to overcome several tech challenges to develop this system.

CXL is a protocol that runs on top of a PCIe link, but it has to be recognized by an ASIC and its subsystem. In other words, one cannot simply add a CXL controller to the tech stack, because GPUs have no CXL logic fabric or subsystems that support DRAM and/or SSD endpoints.

Also, GPU cache and memory subsystems do not recognize any expansions except unified virtual memory (UVM), which is not fast enough for AI or HPC. In tests by Panmnesia, UVM performed the worst across all tested GPU kernels. CXL, however, provided direct access to expanded storage via load/store instructions, eliminating the issues that hamper UVM, such as the overhead of host runtime intervention during page faults and data transfers at page granularity.
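To see why page-level transfers hurt, consider a rough back-of-the-envelope model of how much data each approach moves when a kernel makes scattered accesses. This is an illustrative sketch only: the 4 KiB page size and 64-byte access size are assumptions chosen for the example, not figures from Panmnesia, and the function names are hypothetical.

```python
# Illustrative model only: the sizes below are assumptions, not Panmnesia's figures.
PAGE_BYTES = 4096        # assumed migration granularity for page-fault-driven UVM
CACHE_LINE_BYTES = 64    # assumed granularity of a direct load/store access


def bytes_moved(num_accesses: int, granularity: int) -> int:
    """Worst case: every access lands in a different page or cache line."""
    return num_accesses * granularity


def amplification(num_accesses: int) -> float:
    """How many times more data page-level migration moves than line-level access."""
    paged = bytes_moved(num_accesses, PAGE_BYTES)
    direct = bytes_moved(num_accesses, CACHE_LINE_BYTES)
    return paged / direct


if __name__ == "__main__":
    n = 1_000_000  # hypothetical number of scattered accesses
    print(f"page-level: {bytes_moved(n, PAGE_BYTES) / 2**20:.0f} MiB")
    print(f"line-level: {bytes_moved(n, CACHE_LINE_BYTES) / 2**20:.0f} MiB")
    print(f"amplification: {amplification(n):.0f}x")
```

The model ignores the fault-handling latency itself, which the host runtime adds on top of the extra data movement, so it likely understates the real gap for scattered access patterns.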

What Panmnesia developed in response is a series of hardware layers that support all of the key CXL protocols, consolidating them into a unified controller.

The CXL 3.1-compliant root complex has multiple root ports supporting external memory over PCIe and a host bridge with a host-managed device memory decoder that connects to the GPU’s system bus and manages the system memory.
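Conceptually, a host-managed device memory (HDM) decoder maps address windows to the memory sitting behind each root port. The sketch below illustrates that idea in software only; it is not Panmnesia's hardware design, and the class names, address windows, and port numbers are all hypothetical. Real HDM decoders can also interleave a window across several targets, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class HdmRange:
    base: int       # first address of the window (inclusive)
    size: int       # window length in bytes
    root_port: int  # hypothetical index of the root port behind this window

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


class HdmDecoder:
    """Toy address decoder: routes an address to (root port, offset in window)."""

    def __init__(self, ranges: List[HdmRange]) -> None:
        self.ranges = sorted(ranges, key=lambda r: r.base)
        # Reject overlapping windows so every address has at most one target.
        for a, b in zip(self.ranges, self.ranges[1:]):
            if a.base + a.size > b.base:
                raise ValueError("overlapping HDM ranges")

    def route(self, addr: int) -> Optional[Tuple[int, int]]:
        for r in self.ranges:
            if r.contains(addr):
                return r.root_port, addr - r.base
        return None  # address is not backed by expansion memory


GIB = 1 << 30
# Two hypothetical 16 GiB windows, one per root port.
decoder = HdmDecoder([
    HdmRange(base=0x1_0000_0000, size=16 * GIB, root_port=0),
    HdmRange(base=0x5_0000_0000, size=16 * GIB, root_port=1),
])

if __name__ == "__main__":
    print(decoder.route(0x1_0000_0040))
    print(decoder.route(0x5_0000_0040))
    print(decoder.route(0x0))
```

A load or store whose address falls inside a window is forwarded to the matching root port, while any other address is left to the GPU's local memory path.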

Panmnesia also faces challenges beyond its control, a big one being that AMD and Nvidia must add CXL support to their GPUs. It is also possible that industry players will like the idea of PCIe-attached memory for GPUs and go on to develop their own technology.